Introduction to AI Ethics

AI ethics is an evolving field that examines the moral implications and societal impacts of artificial intelligence technologies. As AI systems become increasingly integrated into our daily lives, addressing the ethical concerns surrounding their development and deployment becomes paramount. The field spans a wide range of considerations, from data privacy to algorithmic bias, with the aim of ensuring that intelligent systems not only function effectively but also align with human values and rights.

The importance of AI ethics lies in its potential to shape the trajectory of technological advancement in beneficial ways. Because AI systems can make decisions that directly affect individuals and communities, stakeholders (including developers, policymakers, and the public) must navigate the ethical landscape thoughtfully. This entails fostering transparency in AI operations, promoting fairness in automated processes, and safeguarding against the misuse of technology. As we progress toward 2025 and beyond, it is essential to recognize that AI innovation carries both significant opportunities and serious risks.

Challenges associated with AI ethics include the complexity of regulatory frameworks, the rapid pace of technological change, and divergent perspectives on what constitutes ethical AI behavior. Moreover, the potential for unintended consequences raises critical questions regarding accountability and responsibility. As intelligent systems continue to evolve, it is vital to engage in robust discourse surrounding these issues to prevent technologies from perpetuating inequalities or infringing on fundamental human rights.

The responsible approach to AI innovation demands a balance between encouraging creativity and ensuring ethical standards. Consequently, AI ethics serves as a guiding principle in navigating the future of intelligent systems, allowing stakeholders to harness the transformative power of AI while mitigating its inherent risks. By prioritizing ethical considerations, we can work towards a future where AI technologies enhance societal well-being and promote justice.

The Landscape of AI in 2025

As we approach 2025, artificial intelligence (AI) is set to undergo significant advancements, particularly in machine learning, natural language processing, and computer vision. These technologies are evolving rapidly and opening up new possibilities across various sectors. Machine learning algorithms are expected to become better at analyzing vast datasets, providing more accurate predictions and insights. This advancement can directly influence sectors like finance, where algorithmic trading and risk assessment will become increasingly sophisticated.

Natural language processing (NLP) is anticipated to improve markedly as well. AI systems will likely become more adept at understanding context and sentiment, enabling them to engage in more meaningful interactions with humans. This will greatly benefit industries such as customer service and marketing, where personalized communication can drive engagement and satisfaction. These advancements also raise ethical questions: how will we ensure that AI is used responsibly in these contexts?

In the field of computer vision, progress is expected in automation and object recognition. This can improve efficiency in sectors such as transportation, where self-driving vehicles rely heavily on computer vision technologies. The implications for safety, law enforcement, and urban planning are profound and will need careful consideration as policies adapt to accommodate these changes.

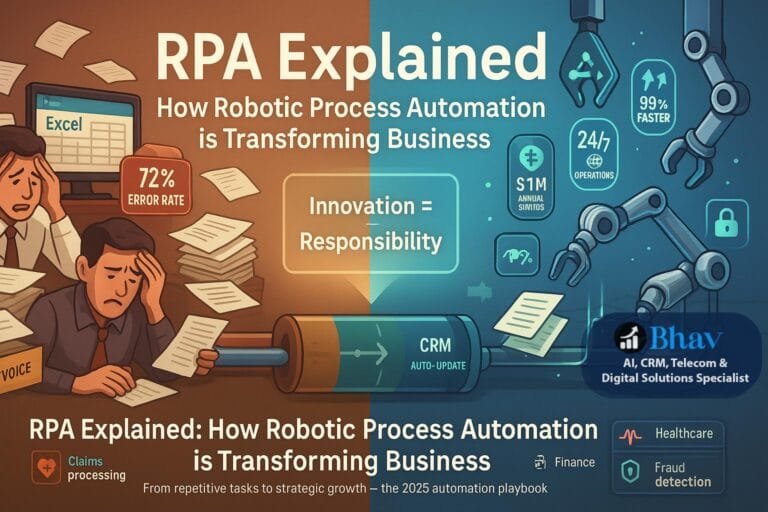

Entertainment, too, will see a transformation, with AI-generated content potentially reshaping storytelling, video games, and virtual reality experiences. As we explore these advancements, it is crucial to examine the ethical considerations that arise, such as the impact on employment, privacy implications, and the potential for bias in AI systems. Thus, understanding the landscape of AI in 2025 will be vital for navigating the balance between innovation and responsibility in the field.

The Legal Framework for AI Ethics

The rapid evolution of artificial intelligence (AI) technology has necessitated the development of comprehensive legal frameworks that govern its ethical implementation. Currently, numerous regulations and international agreements are in place, yet they often lag behind the pace of innovation. Existing frameworks struggle to address the unique challenges posed by AI, particularly regarding accountability, transparency, and fairness. These challenges highlight the urgent need for policymakers to consider legal structures that will keep pace with technological advancements, ensuring the responsible use of AI.

Legal frameworks concerning AI ethics typically comprise a mix of national laws, regional directives, and international treaties. In various jurisdictions, lawmakers are working on specific regulations aimed at controlling the deployment of AI technologies, particularly in sensitive areas such as healthcare, finance, and law enforcement. For instance, the European Union has introduced the Artificial Intelligence Act, which categorizes AI systems based on their risk levels and establishes obligations for high-risk applications. Such regulatory efforts underline the growing recognition of the need for a unified approach to AI ethics.

However, the complexity of AI technologies poses significant challenges for policymakers. Traditional legal principles may not be directly applicable to AI systems, which can change and learn autonomously. This results in uncertainties surrounding liability in cases of harm, discrimination, or privacy violations caused by AI systems. Moreover, the global nature of technology necessitates international cooperation in developing ethical standards and legal instruments that can transcend national borders.

Creating a robust legal framework that addresses these issues will require collaboration among lawmakers, technologists, ethicists, and industry stakeholders. Such partnerships can ensure that AI is developed and deployed responsibly while still supporting innovation. This holistic approach is vital to fostering public trust and advancing societal benefit through AI technologies.

Key Ethical Issues in AI

The rapid advancement of artificial intelligence (AI) technology has ushered in significant ethical challenges that demand careful scrutiny. One of the foremost issues is bias and the resulting lack of fairness in AI systems. Algorithms may inadvertently perpetuate existing societal biases, leading to discrimination in critical domains such as hiring, law enforcement, and credit assessment. For instance, an AI-driven hiring system trained on historical data may favor male candidates over equally qualified female candidates, thus reinforcing gender inequality in the workplace.
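
One common way to make the hiring example measurable is to compare selection rates across groups. The sketch below is illustrative only: the function names and the screening data are invented for this example, and the ratio it computes is a simple screening heuristic (similar in spirit to the "four-fifths rule" used in US employment guidance), not a complete fairness assessment.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the fraction of selected candidates per group.

    `records` is an iterable of (group, selected) pairs, where
    `selected` is True when the system advanced the candidate.
    """
    totals = defaultdict(int)
    chosen = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            chosen[group] += 1
    return {group: chosen[group] / totals[group] for group in totals}


def impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so no disparity to measure
    return min(rates.values()) / highest


# Hypothetical screening outcomes, invented purely for illustration.
decisions = (
    [("men", True)] * 6 + [("men", False)] * 4
    + [("women", True)] * 3 + [("women", False)] * 7
)

rates = selection_rates(decisions)
ratio = impact_ratio(rates)
print(rates, ratio)
```

A ratio well below 1.0 does not prove discrimination on its own, but it is a signal that the system's outcomes deserve a closer look.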

Another pressing concern is privacy. As AI systems analyze vast quantities of personal data to enhance their predictive capabilities, the potential for misuse grows. High-profile incidents like the Cambridge Analytica scandal, in which Facebook users’ personal information was harvested without meaningful consent and used for political targeting, illustrate the risks of data exploitation. The ethical implications of data privacy are profound, raising questions about informed consent and the degree to which users control their data.

Accountability in AI deployment is also a critical ethical issue. Who is responsible if an AI-driven decision leads to harmful consequences? This question is exemplified by autonomous vehicles, which have already been involved in collisions. In such cases, the determination of liability (whether it lies with the manufacturer, the software developer, or the vehicle owner) remains a contentious topic. The lack of clear accountability frameworks can undermine public trust in AI technologies.

Lastly, transparency in AI operations is paramount. Many AI systems, particularly those employing deep learning techniques, function as “black boxes,” making it difficult to uncover how decisions are made. This lack of transparency can exacerbate public apprehension about AI’s capabilities and intentions. Educating stakeholders and promoting transparency are crucial for addressing these ethical dilemmas and fostering a trustworthy relationship between technology and society.
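
One way to probe a black-box system without opening it is to shuffle a single input and count how often the output changes. The sketch below is a minimal, permutation-style sensitivity check under stated assumptions: `opaque_model` is a hypothetical stand-in for a real model, the data is randomly generated, and real explainability tooling is considerably more careful than this.

```python
import random


def opaque_model(row):
    """Hypothetical stand-in for a black box: callers see only inputs and outputs."""
    income, age = row
    return 1 if income > 50 else 0  # secretly ignores age


def permutation_sensitivity(model, rows, column, seed=0):
    """Fraction of predictions that change when one input column is shuffled."""
    rng = random.Random(seed)
    baseline = [model(row) for row in rows]
    shuffled_values = [row[column] for row in rows]
    rng.shuffle(shuffled_values)

    changed = 0
    for row, value, before in zip(rows, shuffled_values, baseline):
        perturbed = list(row)
        perturbed[column] = value
        if model(tuple(perturbed)) != before:
            changed += 1
    return changed / len(rows)


# Synthetic (income, age) rows, generated only for this illustration.
data_rng = random.Random(42)
rows = [(data_rng.uniform(0, 100), data_rng.uniform(18, 70)) for _ in range(200)]

income_effect = permutation_sensitivity(opaque_model, rows, column=0)
age_effect = permutation_sensitivity(opaque_model, rows, column=1)
print(income_effect, age_effect)
```

Here shuffling age never changes a prediction, while shuffling income changes many, which reveals which input the model actually relies on even though its internals stay hidden.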

Balancing Innovation and Ethical Responsibility

As we look towards 2025, the imperative of balancing innovation with ethical responsibility in the realm of artificial intelligence becomes increasingly pressing. Rapid advancements in AI offer unprecedented opportunities for creativity and transformation across various sectors. However, this pace of innovation often raises ethical concerns surrounding privacy, security, and societal impact. Organizations must navigate this delicate landscape, ensuring that their pursuit of technological progress does not compromise ethical standards.

One strategy for achieving this balance is through the adoption of robust ethical frameworks. These frameworks serve as guidelines for decision-making processes and help instill a culture of responsibility within organizations. By incorporating ethical considerations into the innovation process, businesses can foster an environment where creative solutions align with ethical values. For instance, companies like Microsoft have established responsible AI principles that govern their design and deployment of AI technologies. This proactive approach encourages teams to think critically about the societal implications of their innovations.

Case studies from various industries illustrate that it is indeed possible to harmonize innovation and ethical accountability. In the automotive sector, for example, firms are integrating AI into self-driving technology while simultaneously prioritizing safety and regulatory compliance. By actively engaging with stakeholders and addressing potential risks associated with the technology, these companies are not only pushing boundaries but also cultivating trust among consumers.

Moreover, organizations can foster open dialogue and collaboration among multidisciplinary teams to enhance their ethical oversight. Encouraging diverse perspectives allows for a more comprehensive understanding of the potential impacts of AI on different demographics. The key lies in recognizing that embracing innovation does not necessitate sacrificing ethical considerations; rather, both can coalesce to drive responsible yet groundbreaking advancements in artificial intelligence.

Stakeholder Responsibilities in AI Ethics

The rise of artificial intelligence (AI) technologies has transformed numerous sectors, leading to an increased focus on the ethics governing these innovations. As AI systems become more embedded in daily life, it is essential to delineate the responsibilities of various stakeholders in the AI ecosystem. Developers, corporations, regulators, and consumers play critical roles in ensuring that ethical considerations are at the forefront of AI deployment.

Developers are tasked with creating AI systems that prioritize fairness, transparency, and accountability. This involves designing algorithms that are not only efficient but also devoid of bias, ensuring that the outcomes produced by AI tools serve society equitably. Developers must also engage in continuous learning to understand the implications of their work, ensuring that ethical considerations are integrated at every stage of the development process.

Corporations that leverage AI technologies bear the responsibility of implementing ethical standards across their operations. This includes conducting regular audits of AI systems to identify potential biases and risks. Corporations should adopt practices that promote responsible AI usage, fostering a culture of ethical awareness among employees and stakeholders. Support for research initiatives that investigate the ethical implications of AI systems is equally vital for building a sustainable AI framework.

Regulators are responsible for establishing laws and regulations that govern the use of AI technologies. This encompasses forming guidelines that protect consumers and promote accountability among AI developers and corporations. Regulators must remain adaptable, continuously updating policies to keep pace with the rapid evolution of AI technologies while ensuring that the rights and privacy of individuals are adequately safeguarded.

Lastly, consumers have a crucial role in advocating for ethical AI. By demanding transparency and fairness from companies, consumers can drive market changes that prioritize the ethical use of AI technologies. Constructive feedback and engagement with AI systems serve as a mechanism for holding developers and corporations accountable.

Collaboration among these diverse stakeholders is essential for advancing ethical AI practices. By working together, they can create a balanced approach to innovation and responsibility that benefits society as a whole.

Ethical AI: Successful Implementations and Best Practices

The implementation of ethical AI practices serves as a vital cornerstone for organizations striving to develop responsible artificial intelligence technologies. In recent years, numerous companies have set exemplary standards by carefully integrating ethical considerations into their AI operations. One notable example is Microsoft’s AI for Good initiative, which seeks to harness artificial intelligence to tackle large-scale societal challenges, such as climate change and health disparities. By adopting a transparent and socially responsible approach, Microsoft has showcased how ethical AI can have a profound positive impact while addressing critical issues affecting communities worldwide.

Another prominent example is IBM’s commitment to responsible AI through its Watson technology. By establishing the AI Ethics Board, IBM emphasizes the importance of fairness, accountability, and transparency in AI systems. The company aims to develop its AI applications in alignment with ethical guidelines, addressing potential biases and discrimination. Such efforts not only contribute to the trustworthiness of AI output but also foster broad acceptance among users and stakeholders.

To promote responsible AI use across various industries, organizations can adopt best practices that put ethical guidelines into effect. One approach is to conduct regular assessments and audits of AI algorithms to identify biases and mitigate risks related to privacy and discrimination. Additionally, building diverse AI development teams can broaden the range of perspectives represented and reduce the likelihood of biases being built into algorithms. Establishing open channels for stakeholder feedback further allows for the identification of unintended consequences and fosters a collaborative atmosphere for continuous improvement in AI ethics.
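
A recurring audit of the kind described above could, for example, compare error rates between groups and flag the system when the gap grows too large. The following is a rough sketch, not an established audit procedure: the `audit` function, the `max_gap` threshold of 0.1, and the outcome data are all assumptions made for illustration.

```python
def false_positive_rate(pairs):
    """False positive rate for one group.

    `pairs` is a list of (actual, predicted) booleans.
    """
    negatives = [(actual, predicted) for actual, predicted in pairs if not actual]
    if not negatives:
        return 0.0  # no actual negatives, so no false positives are possible
    return sum(1 for _, predicted in negatives if predicted) / len(negatives)


def audit(outcomes_by_group, max_gap=0.1):
    """Flag the model when false positive rates differ by more than `max_gap`."""
    rates = {
        group: false_positive_rate(pairs)
        for group, pairs in outcomes_by_group.items()
    }
    gap = max(rates.values()) - min(rates.values())
    return {"rates": rates, "gap": gap, "flagged": gap > max_gap}


# Hypothetical (actual, predicted) outcomes, invented for illustration.
outcomes = {
    "group_a": [(False, True)] * 2 + [(False, False)] * 8 + [(True, True)] * 5,
    "group_b": [(False, True)] * 5 + [(False, False)] * 5 + [(True, True)] * 5,
}

report = audit(outcomes)
print(report)
```

Running a check like this on a schedule, and recording each report, gives an organization a concrete trail to review when stakeholder feedback points to a problem.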

Ultimately, by focusing on these best practices, organizations can successfully integrate ethical considerations into their AI systems, ensuring that innovation is continually balanced with responsibility. The pursuit of ethical AI not only enhances the credibility of AI systems but also fosters a more equitable and just digital future, benefiting society as a whole.

Future Trends in AI Ethics

As we look ahead to 2025 and beyond, it is crucial to consider the evolving landscape of artificial intelligence (AI) ethics. One significant trend is the increasing influence of public opinion on AI development and implementation. With the rise of social media and online platforms, the public is becoming more vocal about its concerns regarding AI technology. This shift is leading organizations and policymakers to take ethics more seriously as they seek to align their innovations with societal values and expectations. Stakeholders now recognize that public trust is essential for the success of AI initiatives, and ongoing dialogue between tech companies and the communities they serve is therefore likely to continue.

Another vital trend will be the advancement of technologies that promote ethical practices in AI. Innovations such as automated auditing systems and explainable AI are becoming increasingly important. These tools are designed to help AI algorithms operate fairly and transparently, with the goal of reducing bias and fostering accountability. The development of such technologies will support the ethical use of AI by offering mechanisms for evaluation, thereby helping organizations navigate complex moral terrain while maintaining public trust.

Moreover, international movements advocating for AI transparency and accountability are gaining traction. Initiatives such as the Partnership on AI and global collaborations among tech companies, researchers, and policymakers aim to establish standards and best practices for ethical AI use. As more countries participate in these conversations, there will be a growing push for legislation that enforces ethical compliance in AI development. This collaborative approach will help mitigate risks associated with AI while promoting responsible innovation that prioritizes human welfare and dignity.

Conclusion: The Path Forward for AI Ethics

As we look forward to 2025, it is increasingly evident that the development and integration of artificial intelligence must prioritize ethical considerations alongside technological advancements. Throughout this article, we have examined the delicate balance that must be struck between fostering innovation and adhering to ethical standards. It is imperative that developers, policymakers, and other stakeholders collaborate to establish frameworks that govern the responsible use of AI. This collaboration will play a crucial role in ensuring that AI serves as a tool for societal good rather than a source of harm.

The potential benefits of AI are vast, encompassing significant enhancements in efficiency, decision-making, and even creativity. However, these advantages come with substantial ethical implications that cannot be ignored. Issues relating to bias, privacy, and the potential for job displacement highlight the necessity of ethical scrutiny. By taking a proactive stance, stakeholders can mitigate risks and foster an environment where AI technologies are not only innovative but also socially responsible.

Furthermore, education plays a vital role in shaping the narrative around AI ethics. Efforts must be made to raise awareness and understanding of these issues among technology creators and consumers alike. Developing educational programs focused on AI ethics can empower individuals to engage meaningfully in discussions about the impact of AI on society.

In summary, the path forward for AI ethics entails a concerted effort from all parties involved. It is essential for industry leaders, governments, and the public to work collectively in crafting policies that prioritize ethical guidelines while embracing innovation. By doing so, we can create a future where AI technologies are leveraged responsibly, ensuring they contribute positively to society and address ethical challenges effectively.